Google Play Store A/B testing experiments are important for optimising your product page and app listing. The logic is simple: by testing individual listing elements, you can pinpoint the best-performing metadata and creative assets.

Increasing your app’s visibility through metadata optimization, higher category rating, or being featured is fantastic, but it’s only one aspect of ASO. If you do not turn those app impressions into downloads, you are limiting the potential of your app. This is where Google Play’s store listing experiments can come in handy for ASO practitioners.

Google Play store listing experiments allow you to run A/B tests and use the resulting data to identify which creative or metadata components should be included on your app store listing page to help improve conversions.

This blog will provide you with an overview of Google Play Store listing experiments. We will also discuss how to begin testing your store listings, as well as the benefits and drawbacks of A/B testing.

WHAT IS GOOGLE PLAY STORE A/B TESTING?

A/B testing on the Google Play Store allows you to run experiments to find the best text and graphics for your app listing. A/B test experiments are available for both custom store listing and main store listing pages.

You run a Google Play Store A/B test by pitting one or more variants against your current app listing version. Looking at the install data can help you determine which versions will produce the best results.

WHY SHOULD YOU DO MOBILE A/B TESTING?

Before delving into the specifics, you first need to grasp the advantages of incorporating store listing tests into your Google Play ASO approach.

Google Play store listing experiments let you run A/B tests to get meaningful, data-driven insights into the creative and metadata elements that appeal to your users the most.

Benefits of Google Play store listing experiments:

- Metadata identification: Identify the best metadata elements (name, short and long description) and creative elements that should be incorporated on your store listing page.

- Get more users: A/B testing insights can help you increase your app’s installs, conversion rate, and retention rate.

- Learn how users differ based on their locality: Learn what resonates (or not) with your target market, based on their language and locality.

- Receive crucial metrics to make data-driven decisions: Identify seasonality trends and takeaways that can be applied to your store listing page.

- Make your listing more efficient: Build general knowledge about how effective each app listing element is.

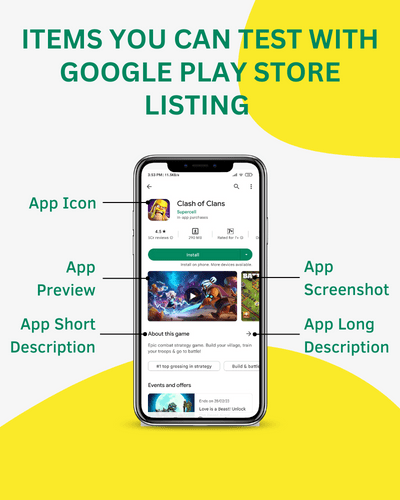

WHAT YOU CAN TEST WITH GOOGLE PLAY STORE LISTING EXPERIMENTS

Next, let’s look at the creative and metadata elements that can be tested through Google Play store listing experiments. The app store elements shown below can be A/B tested in up to five languages.

Icon

The icon for your app is the first creative element that customers see. First impressions are extremely important on Google Play because the icon is frequently the only creative seen in search results and can have a direct impact on your app conversion rate.

Short description

This is the second visible metadata element after your app’s title and can be up to 80 characters long. It displays just below the sample video and screenshots on your store listing page and is the second most weighted item for keyword indexation in Google’s algorithm, after the title. As a result, the short description of your app has a direct impact on app visibility and conversion.

Feature graphic & preview video

The preview video for your app is a short video clip that illustrates the primary value propositions of your app. The feature graphic comes before your app screenshots and overlays the preview video, taking up the majority of the device screen view. As a result, it is a valuable asset for conversion.

Screenshots

Screenshots emphasise your app’s major value propositions and provide customers with an idea of what to expect after downloading the app.

Long description

The long description has a character limit of 4,000 and is useful for keyword indexation. Although only a small number of people read the long description, it has a direct impact on conversion rates for those who do.

HOW TO CREATE AND RUN AN A/B TEST STEP-BY-STEP

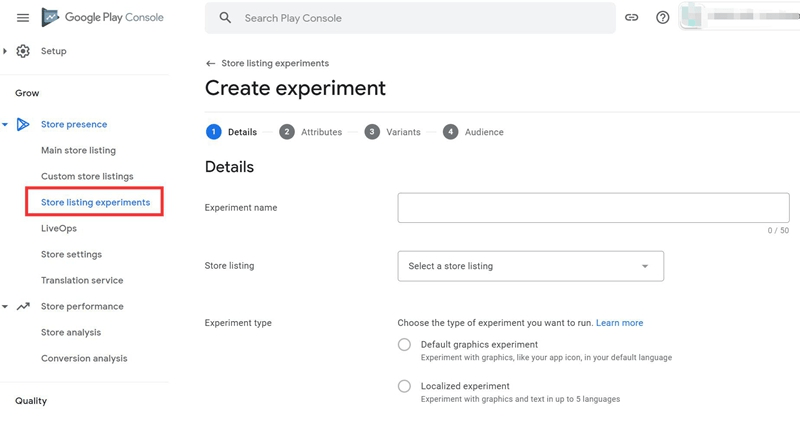

It’s time to use Google Play Console to set up and run your A/B test.

First, log in to your Google Play Console account, select your app, and go to “Store listing experiments” under the “Store presence” section.

Source: Google Play Developer Console

You will be taken to the setup screen, where you can create an experiment or A/B test.

Let’s go over each step from beginning to end.

STEP 1 – PREPARE AND CREATE THE A/B TESTING EXPERIMENT

The first step is to give your experiment a name. We recommend using a meaningful name that allows you to differentiate between the various experiments you will run. The test name is shown only to you and not to Play Store visitors, so you should be able to tell what the test was about just by looking at it.

The second step is to select the type of store listing you want to test. If you do not use Custom store listing pages, the Main store listing will be your only option.

The next step is to select a type of experiment. You have 2 options here:

Localized test: You can test in up to 5 languages. Both creative and metadata elements can be tested.

Global test: Test users from all over the world in only one language. Only creative elements can be tested.

We advise that you use localised tests. What resonates with users the most varies dramatically between languages. A global test will make it difficult to extract any lessons that can be applied to individual territories. Choosing the language of your most significant markets will provide better insights into user behaviour in those markets.

Source: Google Play Developer Console

When you’re finished, click next to move on to the next stage.

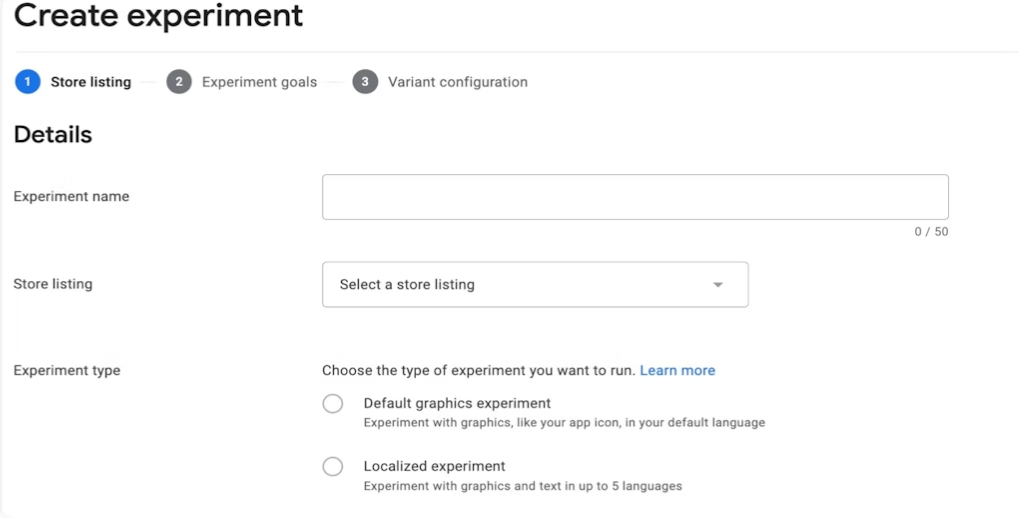

STEP 2 – SET UP THE A/B TESTING EXPERIMENT GOALS

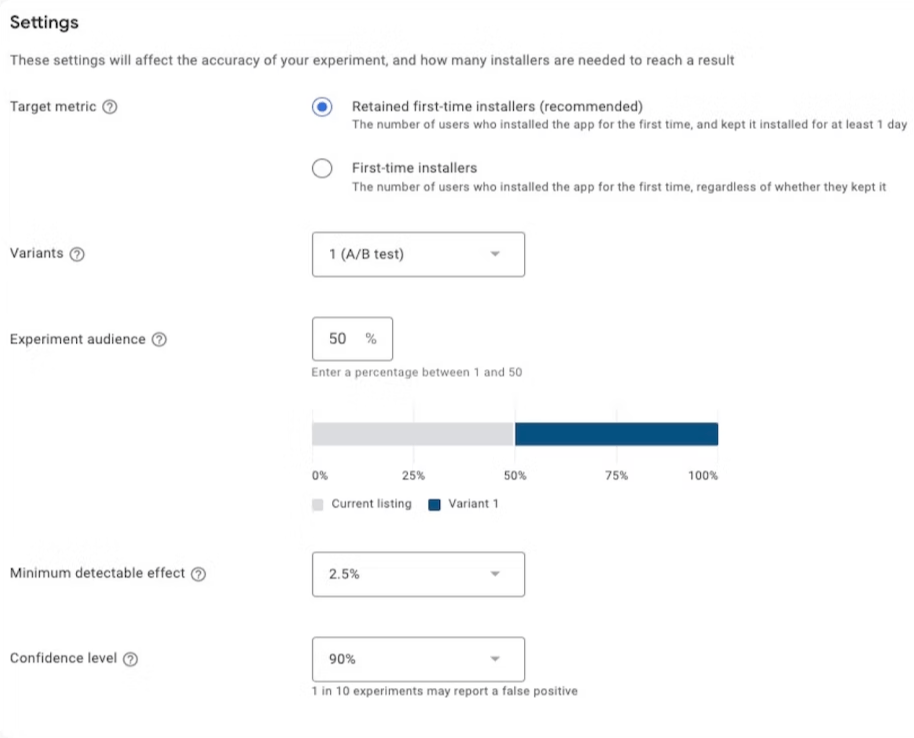

This is the section where you configure the experiment settings, which we described in the previous section of this guide. You want to get this right because the settings you select will affect the accuracy of your test as well as the number of app installs required to get your desired result.

Here is a detailed list of everything you need to know.

Target metric

The experiment outcome is determined by the target metric. You can choose either Retained first-time installers or First-time installers. The first option is recommended, since it targets users who keep your app installed for at least one day.

Variants

Here you can specify the number of versions to test against the current store listing. In general, a test with a single variant will take less time to complete. Google Play Console will display the number of installs required next to each choice.

It is entirely up to you how many variants you wish to test, although we recommend starting with one until you become more experienced with it.

Experiment audience

In the experiment audience setting, you can specify the percentage of store listing visitors who will view an experimental variant rather than your current listing. If you test more than one variant (for example, A/B/C), the testing audience is divided evenly among the experimental variants, so each variant receives an equal share of traffic.
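The arithmetic behind the audience split can be sketched in a few lines of Python. This is only an illustration of the even split described above; the function name `traffic_split` and the example numbers are hypothetical and not part of Google Play Console.

```python
def traffic_split(audience_pct: float, num_variants: int) -> dict[str, float]:
    """Return the share of store listing visitors (in %) seeing each version.

    audience_pct: percentage of visitors who see any experimental variant.
    num_variants: number of experimental variants (excluding the current listing).
    """
    if not 0 < audience_pct <= 100:
        raise ValueError("audience_pct must be in the range (0, 100]")
    if num_variants < 1:
        raise ValueError("num_variants must be at least 1")

    # Visitors outside the experiment audience keep seeing the current listing.
    split = {"current listing": 100.0 - audience_pct}

    # The experiment audience is divided evenly among the variants.
    per_variant = audience_pct / num_variants
    for i in range(num_variants):
        split[f"variant {i + 1}"] = per_variant
    return split


# Hypothetical example: 50% experiment audience, two variants (an A/B/C test).
for version, share in traffic_split(50, 2).items():
    print(f"{version}: {share:.1f}%")
```

With these example inputs, each variant receives half of the experiment audience, while the remaining visitors continue to see the current listing.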

Minimum detectable effect (MDE)

Here you can select the minimum detectable effect that Google Play will use when determining whether the test was successful. You can choose a pre-set percentage from the drop-down menu and see Google Play’s estimate of how many installs you will need to reach a specific MDE.

Confidence level

This option was added to Google Play’s Store listing experiments recently. Previously, you could not choose a confidence level; now you can pick between four. The higher the confidence level, the more reliable your Store listing experiment outcomes will be.

Higher confidence levels reduce the likelihood of a false positive, but they require more installs to achieve.

As a general guideline, we recommend using a 95% confidence level, as this is an industry norm for testing in general.
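To see why a smaller MDE or a higher confidence level requires more traffic, here is a rough sketch based on the standard textbook sample-size approximation for comparing two proportions. It is not Google Play's own estimator, which may work differently. The function name, the 80% statistical power, the two-sided test, and the reading of the MDE as a relative uplift are all assumptions made for illustration, and the result is counted in visitors per version rather than installs.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_version(
    baseline_cr: float,
    relative_mde: float,
    confidence: float = 0.95,
    power: float = 0.80,  # assumption: 80% power, a common default
) -> int:
    """Approximate visitors needed per version to detect the given uplift.

    baseline_cr:  current conversion rate, e.g. 0.30 for 30%.
    relative_mde: minimum detectable effect as a relative uplift, e.g. 0.05.
    """
    p1 = baseline_cr
    p2 = baseline_cr * (1 + relative_mde)

    # z-scores for a two-sided test at the chosen confidence and power.
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)

    # Sum of the binomial variances of the two conversion rates.
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical example: 30% baseline conversion rate, 5% relative MDE.
for level in (0.90, 0.95, 0.99):
    needed = visitors_per_version(0.30, 0.05, confidence=level)
    print(f"{level:.0%} confidence: about {needed} visitors per version")
```

Running the loop shows the required traffic growing as the confidence level rises, which is the trade-off described above.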

Source: Google Play Developer Console

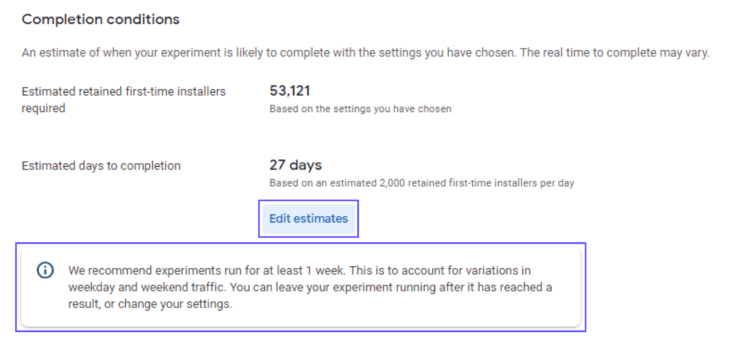

Completion conditions

The final section of this phase outlines how long your experiment is expected to take and how many first-time installers you will need to finish it.

You can modify the estimates by selecting the “Edit estimates” button, and then go to the next stage if you are satisfied.

Source: Google Play Developer Console

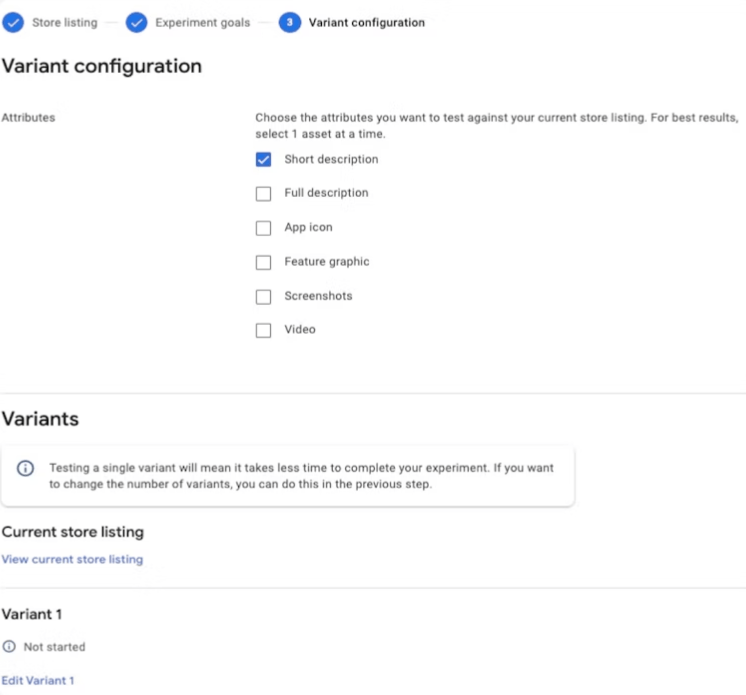

STEP 3 – VARIANT CONFIGURATION IN A/B TESTING

You are now at the point where you select which attribute to test and how the test variant will look.

As mentioned earlier, you can select from six distinct elements, and app descriptions are available only if you run a localised experiment.

It is recommended to test one attribute at a time and to execute only one attribute test for each localization.

You will have one or more testing variants that you can customise depending on the number of versions you choose to test in the earlier stage. Each test version must have a name and the text or image that you want to compare to the current store listing.

Source: Google Play Developer Console

Once you have set up your variants and are satisfied with your settings, click on “Start experiment,” and Google Play will soon make your A/B test experiment live.

MEASURING AND ANALYZING YOUR TEST RESULTS

Every test you create will appear on the “Store listing experiments” page. Before doing any analysis, let Google Play collect data for at least seven days to even out weekend effects and ensure you have enough data.

For each test you run, Google Play provides a lot of additional data that you can follow during the experiment. Google Play will display the best-performing test versions, but there are a few other factors to consider.

Here are the five factors to consider when evaluating and analysing the results:

- Keep in mind the seasonality effect (holiday season, you might see unusual uplifts in results)

- Consider external factors that Google Play doesn’t show

- Google Play testing can result in false positives. To check whether this is the case, you can run a B/A test afterwards to see if your B variant performs the same against the A variant. An even better way is to run an A/B/B test, in which two identical B variants should produce similar results.

- Always examine the results carefully yourself, whether or not you end up following Google Play’s recommendations.

- If you apply the testing findings to your live store listing page, monitor your conversion rates and compare them to the performance prior to the deployment. Annotate your tests in your KPI report and check their performance.
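The A/B/B check mentioned in the list above can be approximated with a standard two-proportion z-test. The sketch below is a generic statistical check, not the method Google Play uses internally; the function name and all install and visitor counts are made up for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(
    installs_a: int, visitors_a: int, installs_b: int, visitors_b: int
) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = installs_a / visitors_a
    rate_b = installs_b / visitors_b

    # Pooled conversion rate under the assumption that both versions are equal.
    pooled = (installs_a + installs_b) / (visitors_a + visitors_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        return 1.0  # no variation at all, so no detectable difference

    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical A/B/B results: (installs, visitors) per version.
a = (300, 1000)
b1 = (345, 1000)
b2 = (310, 1000)

# The two B arms are identical, so a small p-value here is a warning sign
# that the difference between A and B may also be noise.
print("B1 vs B2 p-value:", round(two_proportion_p_value(*b1, *b2), 3))
print("A vs B1 p-value:", round(two_proportion_p_value(*a, *b1), 3))
```

If the two identical B arms differ from each other about as much as B differs from A, treat the apparent winner with caution and consider letting the test run longer.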

PROS AND CONS OF MOBILE APP A/B TESTING STORE LISTING EXPERIMENTS

A/B testing in Google Play has both advantages and disadvantages, in our opinion. Here is a list of the good and bad things about the Store listing experiments.

A/B testing in Google Play Store listing experiment pros

- Free tool to test latest trends & new ideas for improving app marketing

- Easy to set up & run A/B testing in Google Play Store listing experiment

- Test big and small changes with Store listing experiments and get reliable results.

- Get detailed data about your test variant

- Helps the design team create the best screenshots and videos for the app

A/B testing in Google Play Store listing experiment cons

Some of the positive elements can also come with risks at the same time.

- There is a chance that your A/B test variant will perform worse than the existing store listing. As a result, it makes sense to test substantial changes with a smaller percentage of traffic first, and then scale them up to a larger audience size.

- Creating tests for big, significant changes involves a lot of strategy, preparation, and resources.

- It might be time-consuming to test substantial changes on a regular basis. Not only will you need a lot of ideas, but testing completely different app modifications one after the other with little time in between may be counterproductive.

BEST PRACTICES & THINGS TO REMEMBER FOR STORE LISTING EXPERIMENTS

The general testing advice is to test one thing at a time. Still, testing several changes may result in a more statistically significant outcome and improved performance than testing each piece alone.

When doing A/B tests, we recommend that you consider the following factors:

1. Create an A/B testing plan

Evaluate your testing ideas before you begin. You can experiment with different image headlines, splash screenshots, screenshot order, screenshot style (e.g., emotional vs. fact-oriented), messages, and so on.

2. Set up clear basic testing rules

If you’re just getting started with A/B testing on Google Play, try testing one element and one hypothesis at a time. Also, before drawing conclusions, run each test for at least one week.

3. Understand why you want to track something

Keep track of what you change and why a given modification should improve app performance.

4. Strong hypothesis

Having a strong hypothesis is the most important component of A/B testing. For example, you might use the same screenshot types for all localizations and want to customize them for the local audience. In this scenario, a solid hypothesis would be that localising screenshots and messages will increase the conversion rate from store listing visitors to app installs by at least 5%.

5. Learn from past tests

Negative tests should not be viewed as failures; rather, they should be viewed as an opportunity to learn about what your potential consumers dislike.

6. Limit the number of testing attributes

Testing too many items at once might lead to confusion and a lack of a clear picture. It’s difficult to identify which factor contributed the most to better performance. In short, avoid combining video, image, and description changes.
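One lightweight way to apply points 3 and 4 above is to write down each test together with its hypothesis and then check the measured uplift against it. The sketch below is only a suggestion: the `ExperimentRecord` class, its fields, and the example conversion rates are hypothetical, and the 5% target mirrors the example hypothesis above.

```python
from dataclasses import dataclass


@dataclass
class ExperimentRecord:
    """A simple log entry describing what was changed and why."""
    name: str
    element: str            # the single element under test, e.g. "screenshots"
    hypothesis: str
    expected_uplift: float  # relative uplift, e.g. 0.05 for "at least 5%"


def relative_uplift(control_cr: float, variant_cr: float) -> float:
    """Relative change in conversion rate of the variant versus the control."""
    return (variant_cr - control_cr) / control_cr


def hypothesis_met(record: ExperimentRecord, control_cr: float, variant_cr: float) -> bool:
    """True if the measured uplift reaches the uplift stated in the hypothesis."""
    return relative_uplift(control_cr, variant_cr) >= record.expected_uplift


record = ExperimentRecord(
    name="de-DE localised screenshots",
    element="screenshots",
    hypothesis="Localising screenshots and messages raises conversion by at least 5%",
    expected_uplift=0.05,
)

# Hypothetical measured conversion rates for the current listing and the variant.
control_cr, variant_cr = 0.30, 0.33
print(f"Uplift: {relative_uplift(control_cr, variant_cr):.1%}")
print("Hypothesis met:", hypothesis_met(record, control_cr, variant_cr))
```

Keeping one record per test, each with a single element, also makes it easier to learn from past experiments, including the negative ones.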

FINAL WORDS

We hope this has helped you understand how Store listing experiments work. A/B testing is one of the most common ASO tactics, and one you should be using.

Store listing experiments are free to use and offer basic retention analytics, such as retained installers after one day. Because you can select confidence levels, detectable effects, split test variations, and much more, store listing experiments are both powerful and simple to run.

Despite the limitations, you should incorporate and use this tool as much as possible in your regular Google Play optimizations.